From online government services to resident interactions on social media, the touch points keep multiplying, and so does the need to gather data on, document and analyze resident interactions and outcomes.

That’s where artificial intelligence and sentiment analysis can play a major role.

Advances in technology have now put the power of artificial intelligence in the hands of agencies across the country, resulting in positive outcomes for agencies and residents.

In this episode, the “In Case You Missed It” crew talks with Quinn Chasan, Google Cloud’s head of customer analytics for the public sector. Chasan has been with Google for more than eight years. During that time, he has helped state and local agencies tackle old problems in new ways using AI and sentiment analysis.

ON THIS WEEK’S SHOW

- “Google Cloud for State and Local Government”

- “How the State of Oklahoma is Using Data to Fight the Opioid Epidemic”

- Google’s Government and Education Summit

- “Addressing Vaccine Distribution Through Sentiment Analytics”

AN INTERVIEW WITH QUINN CHASAN, GOOGLE CLOUD’S HEAD OF CUSTOMER ANALYTICS FOR THE PUBLIC SECTOR

The following interview was lightly edited for clarity and brevity:

Q: Tell us a little bit about yourself, your background and your role at Google Cloud.

A: I’ve been at Google for eight years, spending about half that time on the digital media advertising (ad tech/martech) side of the house. Before moving to Cloud, I led all our technical media engagements with federal, state and local governments. Through that work, I got involved in public health, trying to bring services to constituents. Then I widened the aperture from there to other government and social services like DMV services, the post office, you name it. If you’re engaging with your public-sector agency, I think my team’s role is to make services as easy as possible for you and bring government closer to your needs. And so that’s really what we’re focused on every day.

Q: Artificial intelligence has been a captivating technology for state and local leaders, but agencies often struggle to identify the right use cases. Can you share how Google Cloud has helped operationalize AI in the public health domain?

A: It’s such a big field, spanning cybersecurity, privacy and constituent services. Specific to my domain, when I think about AI, it’s really about bringing the human touch, the personalized touch, from your agency to the constituent, instead of having someone go through a laundry list of services to get what they need.

How do we bring a conversational tone to a chat agent or a call center to bring the service closer to the constituent? How do we use AI to understand folks’ reactions on our websites and to our advertising, so we can bring a personalized service or recommendation directly to them? So when I think about AI, part of it is the fundamental, basic process. We’re integrating data from different sources that are difficult to combine naturally and trying to organize it. We’re doing back-office work to extract information from documents, where we may need AI to pull out entities. But I’m more excited about the front end: How do we use that confluence of information to take advantage of the higher-level capabilities of AI and improve service outcomes without having to hard-code each step of the way into policy?

Q: I was wondering if you could talk about sentiment analysis and how it relates to AI. Why is it such a critical data point for state and local agencies?

A: When we say sentiment analysis at Google Cloud, what we really mean is diving into the corpus of feedback you’re getting as an agency, and it can be used in a wide variety of areas.

In the public health domain, what we’re really trying to do is take all the natural-language feedback you get, whether from call centers, from searches on your website or on Google, or from surveys that epidemiologists or other folks are running on the ground, bring it all together and then use tools to ask, “Hey, what is the topic of discussion happening in real time? How does that relate to the policies and programs we’re putting out? And how is the public reacting to them at a local level, in multiple languages, in real time?”


And so when we talk about sentiment analysis, it’s sort of along two vectors: what the conversation is about, and who is being impacted. And then, is the feedback positive or negative? I think it’s a general term that we use, but behind it can be a really powerful mechanism for understanding the confluence of touch points your agency has with constituents, which might be in places you wouldn’t expect. But those places are really, really valuable and useful for agency missions.
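To make those two vectors concrete, here is a minimal Python sketch that tallies feedback by topic (what the conversation is about) and region (who is being impacted), labeling each item positive, negative or neutral. It is an illustration under stated assumptions, not Google Cloud’s method: the `Feedback` fields, the sample records and the tiny English word lists are all hypothetical, and a production system would use a trained, multilingual sentiment model rather than keyword counts.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical toy lexicons. A real deployment would use a trained,
# multilingual sentiment model instead of keyword matching.
POSITIVE = {"easy", "helpful", "fast", "thanks", "available"}
NEGATIVE = {"confusing", "slow", "closed", "wait", "unavailable", "worried"}


@dataclass
class Feedback:
    source: str  # where it came from, e.g. "call_center", "site_search", "survey"
    region: str  # who is impacted (assumed: a county name)
    topic: str   # what the conversation is about (assumed already tagged)
    text: str


def polarity(text: str) -> int:
    """Return +1, -1 or 0 by comparing positive and negative keyword hits."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return (score > 0) - (score < 0)


def summarize(items: list[Feedback]) -> dict[tuple[str, str], dict[str, int]]:
    """Count positive, negative and neutral items per (topic, region) pair."""
    labels = {1: "positive", -1: "negative", 0: "neutral"}
    summary = defaultdict(lambda: {"positive": 0, "negative": 0, "neutral": 0})
    for item in items:
        summary[(item.topic, item.region)][labels[polarity(item.text)]] += 1
    return dict(summary)


if __name__ == "__main__":
    feedback = [
        Feedback("call_center", "County A", "vaccine_sites",
                 "The site was closed and the wait was slow"),
        Feedback("survey", "County A", "vaccine_sites",
                 "Booking was easy and staff were helpful"),
        Feedback("site_search", "County B", "eligibility",
                 "confusing eligibility rules"),
    ]
    for key, counts in summarize(feedback).items():
        print(key, counts)
```

Run as a script, this prints one line per (topic, region) pair; with the sample data, County A’s vaccine-site feedback comes out as one positive and one negative item. Grouping on both keys at once is what lets the same feedback answer “what is the conversation about” and “who is affected” together.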

Q: What has the impact been for agencies that have tapped into sentiment analysis? I know your domain of expertise is public health, but maybe you can share with our audience some of those specific use cases?

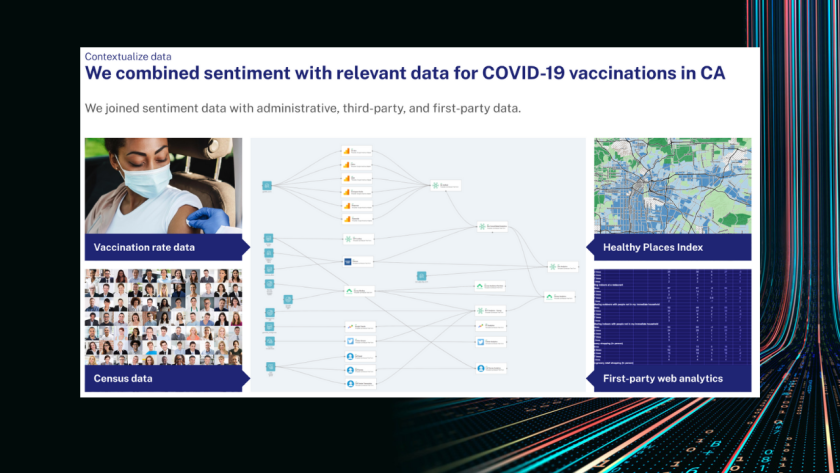

A: I have an example here of some work that was done in the state of California during the COVID-19 pandemic. I won’t get too deep into the weeds on all the pieces. But each line here essentially represents, for lack of a better term, a data pipeline connecting different services, integrated to help tackle the vaccine distribution effort that California and the rest of the world had to go through.

So all of this is abstracted away from you. Our goal is to provide you the output of that, which is really a policy decision device. So when we talk about what it’s used for, a lot of times it’s about logistics decisions. You have a limited set of resources, and public-sector folks are asking where the most effective place to put those resources is today. Whether it’s digital media properties or actual boots on the ground for services, understanding constituents’ reactions to those policies, and then giving closed-loop reporting back to public-sector stakeholders, is key to making progress in any public-sector initiative, public health or otherwise.
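As a rough illustration of the “policy decision device” idea, the sketch below ranks regions by their share of negative feedback so that a limited number of resources (say, mobile clinics or outreach teams) can be pointed at the most affected areas first. The scoring rule, the `min_volume` cutoff and the region names are hypothetical assumptions chosen for the example; a real allocation would weigh many more factors than sentiment alone.

```python
def prioritize(regions: dict[str, dict[str, int]], units: int,
               min_volume: int = 1) -> list[str]:
    """Return up to `units` region names, highest share of negative feedback first.

    Regions with fewer than `min_volume` feedback items are skipped so a
    single complaint does not outrank a well-sampled region (assumed rule).
    """
    scored = []
    for name, counts in regions.items():
        total = sum(counts.values())
        if total >= min_volume and total > 0:
            share = counts.get("negative", 0) / total
            scored.append((share, total, name))
    # Sort by negative share (descending), then volume (descending), then name.
    scored.sort(key=lambda t: (-t[0], -t[1], t[2]))
    return [name for _, _, name in scored[:units]]


if __name__ == "__main__":
    counts = {
        "County A": {"positive": 8, "negative": 2, "neutral": 0},
        "County B": {"positive": 1, "negative": 3, "neutral": 0},
        "County C": {"positive": 0, "negative": 1, "neutral": 0},
    }
    # Two available units; ignore regions with fewer than 2 items.
    print(prioritize(counts, units=2, min_volume=2))  # ['County B', 'County A']
```

Re-running the ranking as new feedback arrives is one simple way to picture the closed loop described above: the output of each round shapes where resources go, and the next round of feedback shows how constituents reacted.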

Q: How are you seeing employees responding to having this type of data and outputs available and accessible to them?

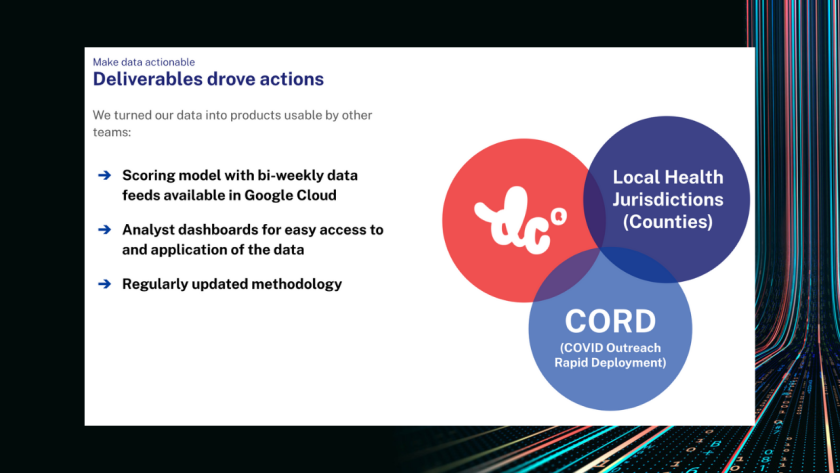

A: We’re in a very different place than we were even two or three years ago. Five or seven years ago, producing the outputs we now get from these processes would have required someone with a research background who could hyper-tune a machine learning model in TensorFlow. Today, I feel like we’ve gotten to a place where, in most of our engagements, we’re serving up the end results of those models in the same tools and systems that public-sector agencies are already using.

So you can see how building those AI models with the teams we’re working with was very complicated work up front. Google makes that process really easy and really scalable. Most importantly, we’re trying to deliver the outputs in a way where you’re not diving in and tuning each piece of the model; we’re getting that information into the tools and services you use today. So you don’t have to sign out and sign into something totally different. If you’re comfortable working with the tools you’re in, we want to bring that data to you. So today, I think it’s an entirely different place. And you really shouldn’t think of AI, automation or machine learning as something threatening; we really want it to be a force multiplier for the work you do today.

Q: Many agencies are interested in emerging AI technologies like natural language processing, which makes sentiment analysis possible, but sometimes struggle to understand what the implementation process looks like. Could you share some insights for those agencies?

A: The good thing about Google Cloud and natural language processing is that it uses the same techniques that run under the hood of our own user products, like Google Search and YouTube. So it has to be lightweight and scalable. And for us ... the typical approach is that we’ll come in with a managed service engagement of six to eight weeks to pull together the data sources. Then we sit with you to help you understand the outputs you need. You can almost think about this the same way you would have approached a classic ETL (extract, transform, load) problem before: We need to take data from one place and make it work with a different system, so we need to transform it to play with our semantic database. Now you can do similar work with Google and our partners when it comes to AI and machine learning models. Say you tell us, “Hey, I need to understand XYZ about my constituency. I like to use CRMs as my interface. I like to use dashboards as my interface.” That’s really the information we need in order to help you get started and go down that path.
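The classic ETL pattern mentioned above can be sketched in a few lines of Python: extract records from two sources with different layouts, transform them into one shared schema, and load them into a single table other tools can query. Everything here is hypothetical: the column names and sample rows are invented, and the in-memory SQLite database merely stands in for whatever target system (a data warehouse, CRM or dashboard back end) an agency actually uses.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw exports from two systems with different layouts.
CALL_CENTER_CSV = """call_id,started,zip,transcript
c1,2021-03-01 09:15,94103,Where can I get a vaccine?
c2,2021-03-01 10:40,94110,My appointment was cancelled
"""

SURVEY_ROWS = [
    {"respondent": "s1", "date": "03/02/2021", "postal_code": "94103",
     "answer": "Clinic staff were helpful"},
]


def extract_calls(raw: str) -> list[dict]:
    """Extract: parse the call-center CSV export into dicts."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform_call(row: dict) -> tuple:
    """Transform a call-center row into the shared (source, id, day, zip, text) schema."""
    day = datetime.strptime(row["started"], "%Y-%m-%d %H:%M").date().isoformat()
    return ("call_center", row["call_id"], day, row["zip"], row["transcript"].strip())


def transform_survey(row: dict) -> tuple:
    """Transform a survey row into the same shared schema."""
    day = datetime.strptime(row["date"], "%m/%d/%Y").date().isoformat()
    return ("survey", row["respondent"], day, row["postal_code"], row["answer"].strip())


def load(conn: sqlite3.Connection, records: list[tuple]) -> None:
    """Load: write normalized records into one table (SQLite stands in for the target)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS feedback "
        "(source TEXT, record_id TEXT, day TEXT, zip TEXT, text TEXT)"
    )
    conn.executemany("INSERT INTO feedback VALUES (?, ?, ?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn: sqlite3.Connection) -> None:
    """Run extract and transform for each source, then load everything together."""
    records = [transform_call(r) for r in extract_calls(CALL_CENTER_CSV)]
    records += [transform_survey(r) for r in SURVEY_ROWS]
    load(conn, records)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_pipeline(conn)
    for row in conn.execute("SELECT zip, COUNT(*) FROM feedback GROUP BY zip ORDER BY zip"):
        print(row)
```

Keeping one small transform function per source means another feed (web search logs, for example) can be added later without touching the load step, and the final query shows how a single plain SQL statement can then span every source at once.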

And from a maintenance standpoint, a lot of this back-end work is actually NoOps, in a totally serverless environment you can run on Google Cloud. Or, if you’re working with a really intense 100 million terabytes of information, we have managed services supporting that kind of infrastructure work.

But I think with Google, not only can you manage this serverlessly and fairly easily, but if you have simple data sets you’re looking to work with in our data warehouse, BigQuery, you can do it with simple SQL. We’re trying to lower the bar of difficulty for working with AI and machine learning tools to something a traditional database specialist could handle today. So I think getting from there to production is much easier than you might think.

Q: What would your advice be to agencies that don’t have employees who are well versed in AI or machine learning, but are looking to build that expertise and capability and take their first step into artificial intelligence deployment? Where should they start? What would your first steps be?

A: From Google’s perspective, we always recommend starting with a focus on the mission. Think about the ability to pull information together and focus on the areas that are constituent-related: As we bring that information together and teach you how we’ve created the models, you have a back-and-forth relationship. That process can teach you how to stand up and add more data sets as you go along.

When we were talking about big data five or seven years ago, it was the beginning of the mobile digital revolution. The amount of data coming at us is doubling, tripling, quadrupling every year as we, as constituents, have offloaded so much of our public decision-making into the digital domain. So that glut of information is expanding faster than we’ve ever seen before. Even if you have an AI expert, they may be someone who’s worked in a field adjacent to what you’re actually trying to do, right? Maybe they come from social media and digital, but you actually need AI and machine learning to dive through medical records. I think AI is not as unified a field, or thought of in the same way, as it used to be.

So I recommend starting with the mission outcome and working backwards from there. Rather than saying, “Hey, I’m gonna hire 10 generalist data scientists who have to deal with streams of data coming at them for the rest of their lives,” I would think about it as teaching people to fish. But working backwards from the mission is always our perspective.

Q: What’s coming down the road? What is next for sentiment analysis in the public sector?

A: It’s been crazy to see this work develop throughout COVID-19, and how many different applications of AI we found along the way. And also how Google supported that work with our Intelligent Vaccine Impact solution, from sentiment analysis and understanding vaccine release, to forecasting and modeling efforts, to translation services and getting people the care and support they need.

I think public health now has a playbook to take to other areas. We work very closely with other groups on the opioid epidemic. We’ve also worked very closely on back-to-work initiatives, social services and labor. All those areas that were immediately impacted by COVID are the first users of a lot of these services. So I think those parts of government have a lot to teach other core services that have experimented less with machine learning but are very central, from food benefits to DMV services to departments of transit and climate change. These are all areas where we’ve started having conversations recently, and I think they have a lot to learn from this process, a lot of upside and the potential to do good. So I’m really excited to see those fields expand, and our team is definitely working on that every day.

LEARN MORE

Google products, including Google Cloud, can help government agencies improve citizen services, increase their operational effectiveness and deliver proven innovation. Google Cloud has helped governments worldwide respond to the COVID-19 pandemic. Google Cloud customers include the Chicago Department of Transportation, the city of Los Angeles, the city of Chattanooga, Tenn., the state of Arizona and many others. For more information, visit cloud.google.com.

For more info on how Google Cloud can help government agencies and the public sector connect the dots, visit Google Cloud’s state and local government solutions site.


COMING SOON

“In Case You Missed It” returns on April 29.

“In Case You Missed It” is Government Technology’s weekly news roundup and interview live show featuring e.Republic* Chief Innovation Officer Dustin Haisler, Deputy Chief Innovation Officer Joe Morris and GovTech Assistant News Editor Jed Pressgrove as they bring their analysis and insight to the week’s most important stories in state and local government.

Follow along live each Friday at 12 p.m. PT on LinkedIn and YouTube.

*e.Republic is Government Technology’s parent company.